From the perspective of the general public and society as a whole, so-called Artificial Intelligence (AI) was largely invisible until OpenAI lifted the veil on GPT-3.5/ChatGPT in 2022.

Since then, interest in and use of AI, including General Purpose AI (GPAI), have exploded. AI implementations are creeping in everywhere, to great benefit in many respects. However, the warning signs are many: manipulated images, fabricated conversations, falsified news stories, and fake events presented on video can lead to unforeseeable negative consequences, for instance by influencing democratic elections.

Risks also arise when AI is used to make decisions, since there is always a risk of “AI hallucinations”, where AI software produces incorrect or misleading information.

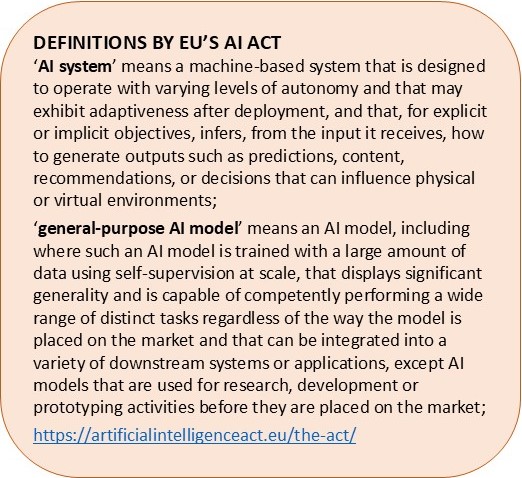

This simplified outline can serve as background for the EU’s AI Act, whose purpose is to establish societal control over the use and influence of AI/GPAI in particular, and of the big tech companies in general.

So let’s dig into the matter, with the aim of describing what the EU AI Act is and clarifying its consequences, if any, for Runbox.

AI is but one element in a comprehensive EU concept: The Digital Decade

The EU’s AI Act (the Artificial Intelligence Act) entered into force on August 1, 2024. It is the world’s first comprehensive legal framework that specifically regulates AI.

Of course, this groundbreaking law didn’t come out of the blue. On the contrary, the process started as early as 2018, when the Coordinated Plan on Artificial Intelligence was published. This strategic plan is a joint commitment between the Commission, the EU Member States, Norway, and Switzerland to maximize Europe’s potential to compete globally.

An important step on the way to the AI Act was the European Declaration on digital rights and principles for the Digital Decade, signed by the Presidents of the Commission, the European Parliament and the Council on December 15, 2022. The Declaration sets out digital rights and principles that reflect EU values and promote a human-centric, secure, and sustainable vision for the digital transformation.

It is noteworthy that the first chapter of the Declaration is headed “Putting people at the center of the digital transformation”. This is at the core of the European strategy for data: “The strategy for data focuses on putting people first in developing technology, and defending and promoting European values and rights in the digital world.”

Then there is the Digital Decade policy programme, with concrete targets and objectives for 2030. The Digital Decade is a comprehensive framework that guides all actions related to Europe’s digital transformation. The aim of the programme is to ensure that all aspects of technology and innovation work for people.

Among the targets in five areas for the digital transformation of businesses, one concerns artificial intelligence: the share of businesses using AI, 8% in 2023, is to increase to 75% by 2030. That is six years from now (2024).

The EU has the ambition “to make the EU a leader in a data-driven society”, and accordingly multiple processes have been carried out, resulting in several directives and regulations, some of which are discussed in the article above.

It would take too long to go into all of them here, but if you are interested in learning more, you may enjoy the following links:

- European Commission: Europe’s Digital Decade

- European Commission: A European strategy for data

- European Commission: Data Act

So, let us now concentrate on The AI Act!

The AI Act – a solution to the impossible?

Artificial intelligence has the potential to create great gains for society, among other things by making it more efficient, providing us with new knowledge, and supporting decision-making processes. At the same time, the use of unreliable AI systems can undermine our rights and values.

The objective of the AI regulation is to ensure that the AI systems put into use are developed in line with our values, that is, that they are human-centric and reliable, and to ensure a high level of protection for health, safety, fundamental rights, democracy, the environment, and the rule of law against the harmful effects of such systems.

But how do you regulate an area that is developing rapidly and unpredictably, driven by big, financially strong, and mutually competing tech companies? Surely this must have been an overarching question among EU lawmakers when they set out to create what would become the AI Act.

It seems like an impossible task.

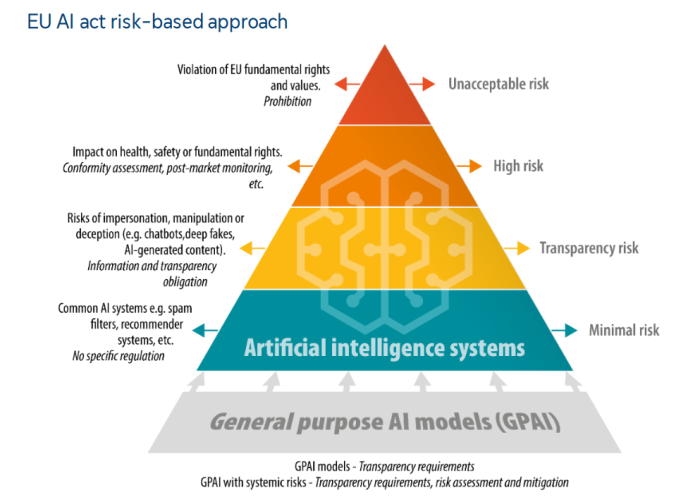

The solution they came up with was to avoid technology-specific terms and limits on possible future AI features, and instead to focus on the risks that AI may pose to individuals and society. In other words, the requirements a system must meet depend on how great a risk the system poses.

In general, and in this context as well, risk means the combination of the probability of an occurrence of harm and the severity of that harm.

You may ask: what is actually at risk when it comes to AI? We find the answer in the Charter of Fundamental Rights of the European Union, whose Preamble begins:

The peoples of Europe, in creating an ever closer union among them, are resolved to share a peaceful future based on common values.

Conscious of its spiritual and moral heritage, the Union is founded on the indivisible, universal values of human dignity, freedom, equality and solidarity; it is based on the principles of democracy and the rule of law. It places the individual at the heart of its activities, by establishing the citizenship of the Union and by creating an area of freedom, security and justice.

The 4-tier Risk Model

As stated in a press release from the Council of the EU on 21 May 2024: “The AI act is a key element of the EU’s policy to foster the development and uptake across the single market of safe and lawful AI that respects fundamental rights.”

So what does this model look like?

The figure below illustrates the model, and we will comment on each level further down.

The four levels of the AI Act:

PROHIBITED AI PRACTICES/UNACCEPTABLE RISK AI SYSTEMS: Some AI systems are deemed contrary to the values and fundamental rights of the EU and are prohibited.

The overview of prohibited systems (AI practices) can be found in Article 5 of the regulation. Examples of prohibitions are:

- AI systems that attempt to influence the user’s subconscious or that deliberately use manipulative or deceptive techniques, with the intention of distorting the behavior of individuals or groups.

- AI systems that exploit vulnerabilities in people or groups based on age, disability or a specific social or economic situation, with the intention of distorting the behavior of individuals or groups in a harmful way.

- Biometric categorization systems that categorize individuals based on their biometric data to infer their race, political opinions, trade union membership, religious or philosophical beliefs, sex life or sexual orientation (with certain law enforcement exceptions).

HIGH-RISK AI SYSTEMS: According to the classification rules in Article 6, AI systems are high-risk when they may affect the safety of individuals or their fundamental rights, which justifies subjecting them to enhanced requirements. This covers AI systems used as safety components of products governed by the Union harmonization legislation listed in Annex I, as well as the AI systems listed in Annex III.

Examples of high-risk AI systems are the AI systems in the following areas:

- Biometrics, in so far as their use is permitted under relevant Union or national law;

- Critical infrastructure;

- Education and vocational training;

- Employment, workers’ management and access to self-employment;

- Access to and enjoyment of essential private services and essential public services and benefits;

- Law enforcement, in so far as their use is permitted under relevant Union or national law;

- Migration, asylum and border control management, in so far as their use is permitted under relevant Union or national law;

- Administration of justice and democratic processes.

LIMITED RISK AI SYSTEMS/TRANSPARENCY RISK: For systems that do not fall under the high-risk definition, there will in some cases be a requirement for transparency. This means that it must be clear and easy to understand when a person is interacting with an AI system, or when system outputs have been artificially generated.

Examples are (Article 50):

- AI systems intended to interact directly with natural persons shall be designed and developed in such a way that the natural persons concerned are informed that they are interacting with an AI system;

- Outputs of AI systems, including general-purpose AI systems, that generate synthetic audio, image, video or text content shall be marked and detectable as artificially generated or manipulated;

- The natural persons exposed to an emotion recognition system or a biometric categorization system shall be informed of the operation of the system;

- For AI systems that generate or manipulate image, audio or video content constituting a deep fake, it shall be clearly disclosed that the content has been artificially generated or manipulated.

MINIMAL RISK AI SYSTEMS: Systems that fall within neither the unacceptable-risk nor the high-risk category, and are not subject to transparency obligations, can be described as systems with minimal risk.

Systems with minimal risk are, for example, programs such as AI-powered video games or spam filters. For systems with minimal risk, the AI Regulation imposes no requirements. However, providers are encouraged to follow ethical guidelines on a voluntary basis. [Source in Norwegian]

Obligations for high-risk systems

In the context of obligations, it is wise to be aware of the following roles, roughly described like this [Source]:

- Providers/developers are both those who develop an AI system/GPAI system/model, and those who market such systems;

- Deployers/users are bodies who have implemented an AI system for their own purposes;

- End-users are both the people who use AI systems and the people who are influenced by the AI system’s decision-making;

- Importer means a natural or legal person located or established in the Union that places on the market an AI system that bears the name or trademark of a natural or legal person established in a third country;

- Distributor means a natural or legal person in the supply chain, other than the provider or the importer, that makes an AI system available on the Union market.

The term data subject should also be mentioned (an important term in the GDPR, and often referred to in the AI Act). A data subject is an identified or identifiable natural person, that is, a person who can be identified directly or indirectly.

Most of the requirements in the AI Regulation apply to high-risk systems only. This applies regardless of whether the providers (developers) are based in the EU or in a third country. In addition, the requirements apply if the output of a high-risk AI system from a third-country provider is used in the EU. [Source]

Before a High-Risk AI System (HRAIS) can be made available on the EU market, its providers must carry out a conformity assessment. This must show that the system in question complies with the mandatory requirements in the AI Act, such as requirements for data quality, documentation and traceability, transparency, human oversight, accuracy and robustness. If the system or its purpose changes significantly, the assessment will have to be repeated.

For some AI systems, an independent body will also have to be involved in this process to carry out third-party certification. Providers of high-risk AI systems will need to implement quality and risk management systems to ensure they comply with the new requirements. This includes systems for monitoring the AI systems after they have been placed on the market.

It is important to note that the AI Act has a cross-border effect, in that actors established outside the EU are covered. Thus, the regulation applies to providers placing AI systems or GPAI models on the market in the EU, irrespective of whether those providers are established or located within the Union or in a third country. Furthermore, deployers of AI systems located within the EU are covered by the regulation, as are providers and deployers of AI systems based in a third country where the output produced by the AI system is used in the Union. (See Article 2 for more.)

Consequently, the AI Act places responsibility for ensuring compliance on all actors involved, from development to the provision of AI-based services, i.e. providers/developers, deployers/users, importers, distributors, and other third parties in the value chain — with cross-border effect.

So, what about the AI Act from Runbox’s perspective?

Prohibited AI practices and high-risk AI systems, as explained in an EU press release, are not relevant to Runbox, so we can set them aside right away.

However, the special transparency requirements could apply to an automated customer support chat service, in which an interactive AI/GPAI system generates answers to customer questions, for example based on our Help Pages. In that case we would need to make clear to users that they are interacting with an AI system, and likewise mark any synthetic audio, image, video, or text content such a service generates as artificially generated.

It is also clear that using AI/GPAI systems for spam filtering and for other purposes related to managing our own systems falls into the “minimal risk” category, because such use would pose minimal risk to citizens’ “rights and safety” (key words in an EU context). The same applies to using AI/GPAI systems for research purposes, or for producing documents or images for internal use or for our own web pages.

For such minimal-risk use, the AI Regulation makes no specific requirements, and our own guidelines will effectively constitute our AI policy.

But what about the GDPR requirements and the AI Act?

We will discuss that in a forthcoming blog post, but let us establish one thing here and now: the AI Act does not change anything in relation to the GDPR, so the GDPR applies fully to systems that fall under the AI Act.